Ever wondered how well an AI model truly understands language? In this blog, we simplify the concept of perplexity — a key metric used to measure a model’s "confusion" when predicting the next word in a sentence. From candy stores to ice cream flavors, we break down complex equations into relatable everyday examples. Whether you're an AI newbie or just curious, you’ll learn how perplexity works, how it's calculated using Python, and why it's essential for building smarter language models. Dive in and demystify one of AI’s most powerful metrics!

In this blog I try to explain perplexity in a way everyone can understand. The equation looks complex but we will break it down here.

Imagine you walk into a candy store. You see hundreds of different candies in jars. Some are chocolates, some are gummy bears, and some are things you’ve never seen before. Your favorite, though, is a chocolate bar.

Now, you tell the shopkeeper, “Give me a candy, but make sure it’s one I’ll like!”

What do you think will happen?

If the shopkeeper knows you well, he might confidently give you a chocolate bar (because he remembers you love chocolates).

But if he has no clue what you like, he might be confused and just randomly pick something. Maybe a sour lemon candy!

That’s perplexity in action.

What Is Perplexity in Simple Terms?

Perplexity is a fancy way of saying “How confused am I?”

If someone asks you a question and you **know exactly** what to say, you have low perplexity (because you’re not confused at all).

If you have no idea and just have to guess randomly, you have high perplexity (because you’re super confused).

In AI and language models, perplexity tells us how well a model understands something. A lower perplexity means the model is making smarter guesses, while a higher perplexity means it’s just guessing wildly.

The Ice Cream Example

Let’s say you go to an ice cream shop that has four flavors:

- Chocolate

- Vanilla

- Strawberry

- Mint

If I ask you to guess which one I will pick, you have a 1 in 4 chance (because I could choose any of them).

Now, imagine you already know that I love chocolate and always pick chocolate.

Your guess now becomes super easy — you’d say “chocolate” with confidence!

This means your perplexity is low because you’re not confused at all.

But if I like all the flavors equally, you have no clue which one I’ll pick. You’ll just have to guess.

That means high perplexity — you’re confused!
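To put rough numbers on the ice cream example, here is a small sketch that borrows the formula introduced later in this post. The function name `perplexity` and the 0.97 figure for the chocolate lover are assumptions made up for illustration.

```python
import math

def perplexity(probs):
    """Perplexity of a guesser, given the probability it gave to each actual pick."""
    avg_surprise = -sum(math.log2(p) for p in probs) / len(probs)
    return 2 ** avg_surprise

# Four flavors liked equally: every actual pick was given probability 1/4.
no_clue = perplexity([0.25, 0.25, 0.25, 0.25])

# Hypothetical: you know I love chocolate, so you gave each pick probability 0.97.
knows_me = perplexity([0.97, 0.97, 0.97, 0.97])

print(f"No clue:  {no_clue:.2f}")
print(f"Knows me: {knows_me:.2f}")
```

A perplexity of 4 can be read as "choosing among four equally likely options", while a value close to 1 means there is almost no confusion left.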

Perplexity in AI and Language Models

Now, let’s apply this to how AI models like ChatGPT, DeepSeek, or Claude (or any LLM) understand words.

When a model reads a sentence, it tries to predict the next word based on what it has learned.

For example, if you say:

“The cat sat on the…”

The model knows that words like “mat”, “sofa”, or “floor” are likely choices.

But if you say:

“The cat sat on the spaceship and ordered a…”

Now, the model is more confused (because cats don’t usually order things on spaceships!).

If the model struggles to guess the next word, its perplexity is high.

If the model predicts the next word easily, its perplexity is low.

Let’s move to the Mathematical Side of Perplexity

Now that we’ve understood perplexity with candy and ice cream, let’s get a little more technical.

At the core of perplexity is entropy, a concept from information theory. Entropy measures uncertainty — or how “surprised” we are when we see new information.

Further reading: Entropy (information theory), Wikipedia (en.wikipedia.org)

What is Entropy?

Entropy is a measure of uncertainty in a system. If you always know what will happen next, the entropy is low. If you have no clue, entropy is high.

Entropy and Perplexity in Language Models

In AI and NLP, entropy measures how uncertain the model is when predicting the next word in a sentence.

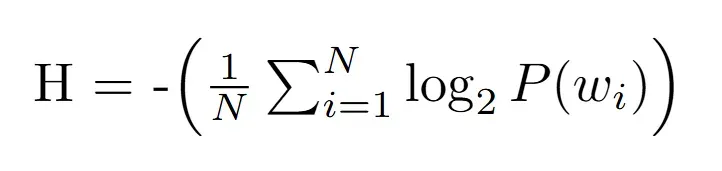

The Formula for Entropy (H)

$$
H = -\frac{1}{N} \sum_{i=1}^{N} \log_2 P(w_i)
$$

where:

- N = total number of words in the sentence (or text sample)

- P(w_i) = probability of each word w_i in the sentence

What Does This Mean?

If the model is highly confident in predicting words (e.g., it assigns a probability of 1.0 to the correct word every time), entropy is low. If the model struggles and assigns equal probabilities to all possible words, entropy is high.
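As a minimal sketch of the entropy calculation described here (the average of -log2 P(w_i) over the N words), assume we already have the probability the model gave to each correct word. The helper name `average_entropy` and the eight-word vocabulary are illustrative assumptions.

```python
import math

def average_entropy(probs):
    """Average of -log2(p) over the words, in bits per word."""
    return sum(-math.log2(p) for p in probs) / len(probs)

# Fully confident model: probability 1.0 for the correct word every time.
confident = average_entropy([1.0, 1.0, 1.0])

# Clueless model: probability spread evenly over a hypothetical 8-word vocabulary.
clueless = average_entropy([1 / 8, 1 / 8, 1 / 8])

print(f"Confident model: {confident:.1f} bits per word")
print(f"Clueless model:  {clueless:.1f} bits per word")
```

The confident model scores 0 bits, and the clueless one scores 3 bits, which is log2 of the 8 equally likely choices.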

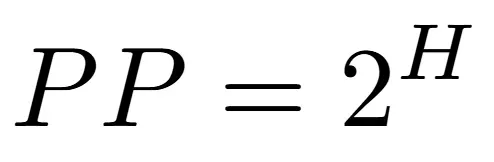

Now, What is Perplexity?

Perplexity (PP) is directly derived from entropy. It translates uncertainty into a more intuitive number:

$$
PP = 2^{H}
$$

This equation means:

If entropy is low, perplexity is close to 1 (low confusion). If entropy is high, perplexity is very large (high confusion).
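It can help to unroll the definition. Taking H to be the average of -log2 P(w_i) over the N words (this step is not spelled out in the original post, but it follows directly from the two definitions):

$$
PP = 2^{H} = 2^{-\frac{1}{N}\sum_{i=1}^{N}\log_2 P(w_i)} = \left(\prod_{i=1}^{N} P(w_i)\right)^{-1/N}
$$

So perplexity is the inverse of the geometric mean of the word probabilities. If every word gets probability 1, then PP = 1. If every word gets probability 1/k, then PP = k.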

Perplexity in Real Life

The goal of AI models is to reduce perplexity — so they become better at predicting the right words!

Python Code to Calculate Perplexity

Now, let’s implement this in Python. Given a language model’s probability distribution over words, we compute the perplexity.

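    import os

    from openai import OpenAI

    # Replace the placeholder with your own key (better: set it outside the script).
    os.environ['OPENAI_API_KEY'] = "sk-proj-******"

    client = OpenAI()

    # Ask the model to complete a sentence and return per-token log probabilities.
    response = client.chat.completions.create(
        messages=[{
            "role": "user",
            "content": "Complete this sentence: It looks like",
        }],
        max_tokens=10,
        logprobs=True,
        model="gpt-4o-mini",
    )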

Above, we ask the OpenAI model to complete a sentence and to return the log probability of each token it generates.

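    import math

    probabilities = {}

    # Convert each token's log probability (natural log) back to a probability.
    for token_logprob in response.choices[0].logprobs.content:
        probability = math.exp(token_logprob.logprob)
        print(f"{token_logprob.token}: log_prob -> {token_logprob.logprob:.6f} -> Probability: {probability:.6f}")
        # Stored as a 4-decimal string; a repeated token overwrites its earlier entry.
        probabilities[token_logprob.token] = f"{probability:.4f}"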

Here we convert each logprob back into a probability by exponentiating it.

    import numpy as np

    def calculate_perplexity(probabilities):
        """
        Calculates perplexity given a list of word probabilities.

        :param probabilities: List of probabilities for each word in a sentence.
        :return: Perplexity value.
        """
        N = len(probabilities)  # Number of words
        entropy = -np.sum(np.log2(probabilities)) / N  # Average negative log2 probability
        perplexity = 2 ** entropy  # Perplexity formula
        return perplexity

    word_probabilities = [float(i) for i in probabilities.values()]
    print(word_probabilities)

    perplexity_score = calculate_perplexity(word_probabilities)
    print(f"Perplexity: {perplexity_score:.4f}")

This is the calculation of perplexity.
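As a hedged alternative, perplexity can also be computed straight from the natural-log logprobs, without converting to probabilities first. The sketch below keeps one value per token position (so a repeated token is not merged with an earlier occurrence) and skips the rounding step. The function name `perplexity_from_logprobs` is an assumption, and the sample values are the logs of the two probabilities reported for the first example below, not a live API response.

```python
import math

def perplexity_from_logprobs(logprobs):
    """Perplexity from natural-log token probabilities: exp of the mean negative logprob."""
    if not logprobs:
        raise ValueError("need at least one token logprob")
    mean_nll = -sum(logprobs) / len(logprobs)
    return math.exp(mean_nll)

# With a live response, this list could be built as:
#   [t.logprob for t in response.choices[0].logprobs.content]
sample_logprobs = [math.log(0.8446), math.log(0.8945)]

result = perplexity_from_logprobs(sample_logprobs)
print(f"Perplexity: {result:.4f}")
```

Because the exponent base matches the log base, `exp` of the average natural-log loss gives the same number as 2 raised to the entropy measured in bits.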

Let’s run the two example sentences from earlier through the code above:

Example 1: The cat sat on the

Model output: mat.

Probabilities:

    {'mat': '0.8446', '.': '0.8945'}

Perplexity: 1.1505

Example 2: The cat sat on the spaceship and ordered a

Model output: 'treat from the automated snack dispenser, eagerly awaiting'

Probabilities:

    {'t': '0.5995',
     'reat': '0.8771',
     ' from': '0.9942',
     ' the': '0.9973',
     ' automated': '0.0122',
     ' snack': '0.5334',
     ' dispenser': '0.9966',
     ',': '0.9146',
     ' eagerly': '0.4826',
     ' awaiting': '0.5856'}

Perplexity: 2.0223

Interpreting the Results

If the model is confident (high probability values), perplexity will be low, as in the first example. If the model is confused (low probability values), perplexity will be high, as in the second example.
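To see which token drives the second example's score, the sketch below lists each token's surprisal, -log2(p), using the probabilities reported above. The variable and function names are illustrative, and the numbers are copied from that output rather than freshly generated.

```python
import math

# Token probabilities reported for example 2 above.
token_probs = [
    ("t", 0.5995), ("reat", 0.8771), (" from", 0.9942), (" the", 0.9973),
    (" automated", 0.0122), (" snack", 0.5334), (" dispenser", 0.9966),
    (",", 0.9146), (" eagerly", 0.4826), (" awaiting", 0.5856),
]

def perplexity(probs):
    """2 raised to the average surprisal (in bits) of the given probabilities."""
    return 2 ** (sum(-math.log2(p) for p in probs) / len(probs))

# Surprisal in bits: large values mark tokens the model found unlikely.
surprisals = {token: -math.log2(p) for token, p in token_probs}
most_surprising = max(surprisals, key=surprisals.get)

all_tokens = perplexity([p for _, p in token_probs])
without_outlier = perplexity([p for t, p in token_probs if t != most_surprising])

print(f"Most surprising token: {most_surprising!r} ({surprisals[most_surprising]:.2f} bits)")
print(f"Perplexity with it:    {all_tokens:.4f}")
print(f"Perplexity without it: {without_outlier:.4f}")
```

A single unlikely token (' automated') accounts for most of the gap between the two examples, which shows how sensitive perplexity is to one bad guess in a short sequence.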

Why Perplexity Matters in AI

1. Evaluating Language Models

Perplexity is used to measure how well an AI understands language. Lower perplexity means the model better predicts the next word.

2. Training Better Models

AI researchers train models to reduce perplexity over time. A drop in perplexity means the model is learning effectively.

3. Real-World Applications

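Perplexity is used to evaluate language models in areas such as machine translation, speech recognition, and chatbots. Language models are typically trained by minimizing cross-entropy loss, and perplexity is the exponential of that loss, so lowering one lowers the other.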

TL;DR (Too Long; Didn’t Read)

- Perplexity = How confused you are

- Low perplexity = Confident, smart guesses

- High perplexity = Wild guessing, confusion

- AI models with low perplexity are better at predicting words and understanding language.

- Python Code: We used a simple function to compute perplexity.

Connect with the author: https://www.linkedin.com/in/anudev-manju-satheesh-218b71175/